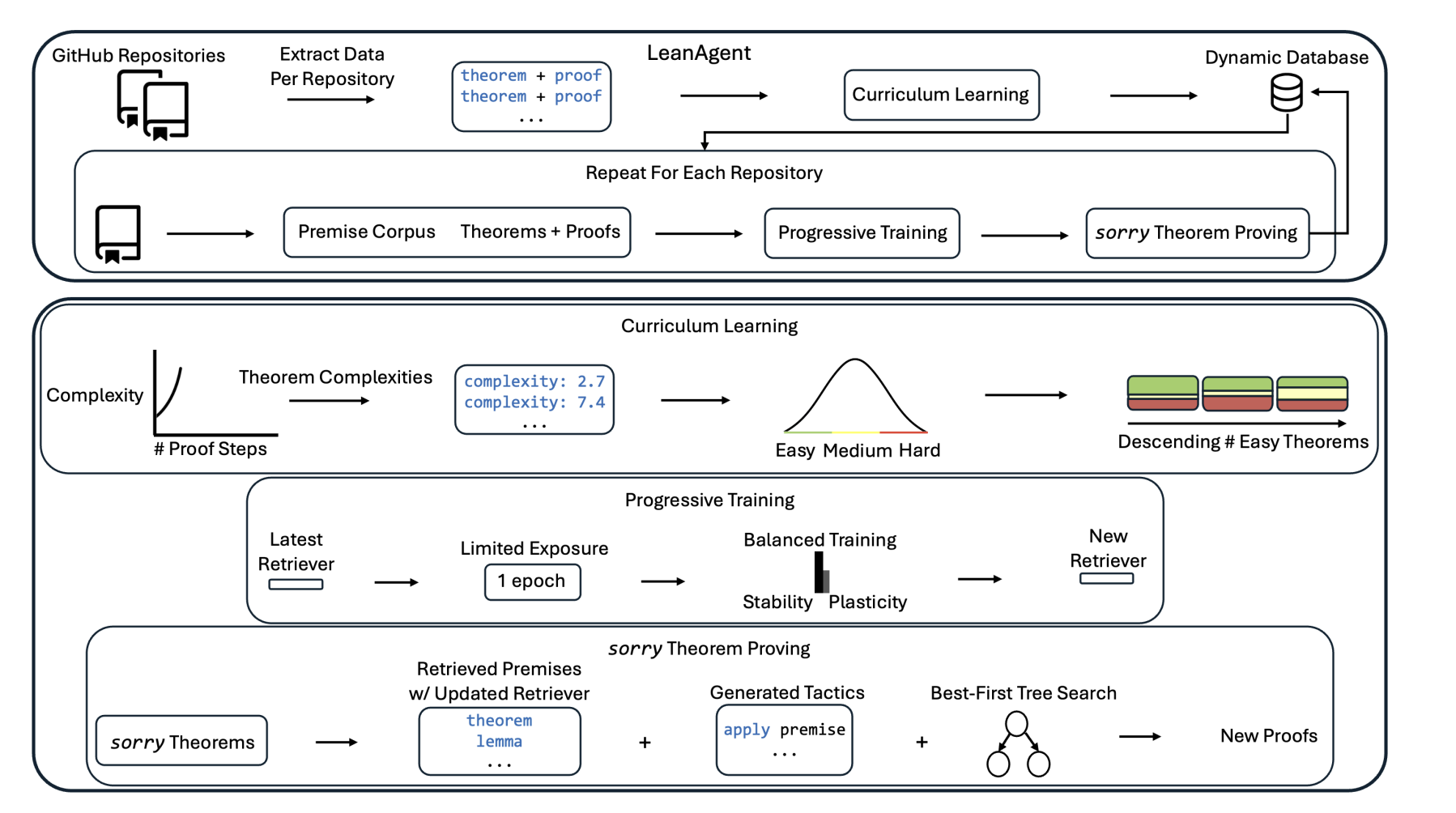

Curriculum Learning Strategy

LeanAgent employs a sophisticated curriculum learning approach that uses theorem complexity based on e^S (where S is the number of proof steps) to order repositories by difficulty. It calculates the 33rd and 67th complexity percentiles to categorize theorems as easy, medium, or hard, then sorts repositories by their count of easy theorems to create an optimal learning trajectory.

Dynamic Knowledge Database

The system maintains a comprehensive dynamic database that tracks repository metadata, theorem categorization (proven, sorry proven, sorry unproven), premise files with imports, traced files, and detailed theorem information including proof states. This enables efficient reuse and management of evolving mathematical knowledge across repositories.

Progressive Training Architecture

LeanAgent implements progressive training that incrementally trains the retriever on newly generated datasets for exactly one epoch per repository to prevent catastrophic forgetting. This approach balances stability (retaining old knowledge) with plasticity (learning new concepts), achieving superior performance on lifelong learning metrics including backward transfer.

Best-First Tree Search with Retrieval

For sorry theorem proving, LeanAgent uses a best-first tree search that generates tactic candidates at each step, retrieves relevant premises based on similarity to the current proof state, and applies dependency filtering to ensure only accessible premises are considered. The search continues until a proof is found or the 10-minute time limit is reached.